So right now I am working on the questionnaire that I was hoping to roll out this semester. After more than a year of library studies, I need data! However, with less than a month left of the spring semester, and with the questionnaire and the logistics around it not yet ready, the prospects of that happening grow slimmer each day. Foolishly, I remain hopeful.

I have decided to do an electronic survey for many reasons. Being able to ensure confidentiality (which will improve the reliability of my study) and keeping admin to a minimum (which will improve my personal economy) are the top two. I am using open source software, LimeSurvey, hosted on LimeService, for my survey.

Last month, I did a pilot of the first version of the questionnaire. On a Friday, I walked into the ICT building, cleared my throat and asked the students who were sitting there to navigate to my survey URL and fill in my questionnaire. 15 students volunteered. Now I am trying to understand what to do with the information I got. (Most of the responding students have not been abroad; however, a majority want to migrate, most of them for a graduate degree, and only one imagines working abroad in five years' time. Half of the respondents hold a passport, but only one holds a valid visa, and one in five has had a visa application rejected. A majority plan to apply for a visa as a student. A majority of those who indicate wanting to migrate say they plan to be away for a shorter period of time (less than 3 years), and most say they would like to return. ***Read this write-up with caution, as the sample is very small and not randomized or controlled in any way.***)

1. Adding questions – what gaps were left by the current questionnaire?

So far I have come up with the following: financial situation needs better specification, year group should be asked about (although second-year students are targeted, it would be interesting to see how answers vary, if at all, for the occasional other-year student), and maybe there should be more questions about visa applications and why they were denied in so many cases.

2. Clarifying questions – were some answers ambiguous because of wording/answering options?

Here I have some work to do. Some questions allow for multiple choice (“check all that apply” etc.), others for ranking. I find that those answers were sometimes difficult to analyze. However, forcing respondents to choose one option also hides interesting nuances. Hm.

3. Dropping questions – were some questions unnecessary?

I have a bunch of questions on “local” aspirations, coming from the idea that if local labor market opportunities are not advertised as well as the foreign options, the latter look like your only way forward. But as the two are not mutually exclusive, now I do not know. Another idea was to similarly tone down migration as “the only option” in my study, so as not to get biased answers. Does it really further my study on migration aspirations if someone is knowledgeable (or not) about local opportunities? Cutting questions out also makes space for new, more focused questions and, even better, for not replacing all of them, so that the questionnaire becomes briefer.

One of the more interesting preliminary “results” is the pie-chart above. When asking students if they had taken the first step toward studying abroad – obtaining information – two out of three students answered yes.

This tells me my study is looking at something relevant for Ghanaian youth.

This post is a Migration Monday post, taking you behind the scenes of doctoral studies in Ghana and giving me the opportunity to write about what I do in less formal language.

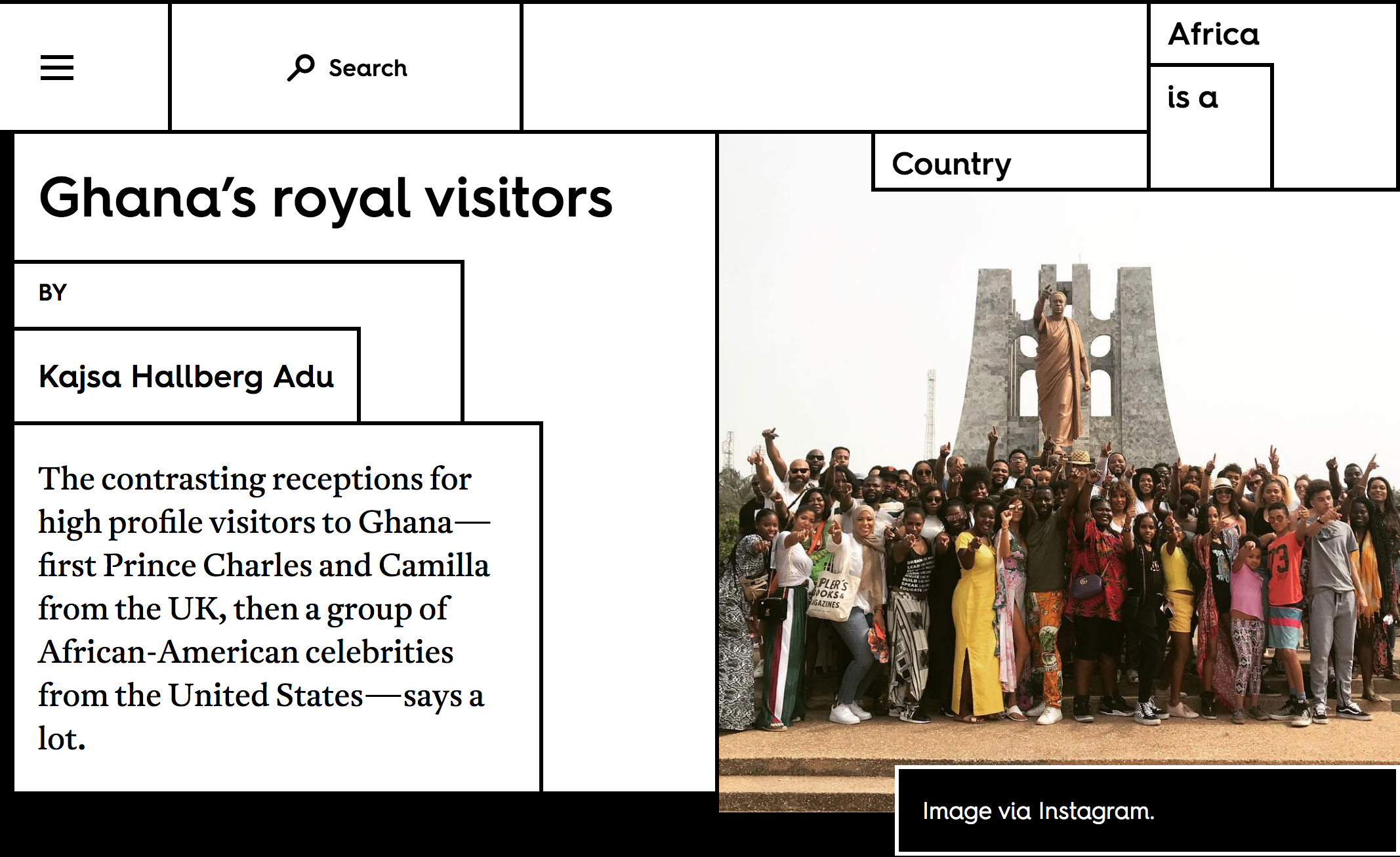

This week, my department, the Institute of African Studies at University of Ghana, is organizing a major conference on the theme: “Revisiting the first international congress of Africanists in a globalised world”. The three-day conference is a part of the institute’s 50th anniversary celebration and also links to the 1963 convention for Africanists opened by Ghana’s first president Kwame Nkrumah. This conference will be opened by the current president, John Dramani Mahama!
